Google DeepMind’s senior clinical scientist says AI will not “deskill” workers

- 24 May 2019

Just as the thermometer didn’t destroy healthcare as we know it, artificial intelligence (AI) will not “deskill” workers, according to Google DeepMind’s senior clinical scientist.

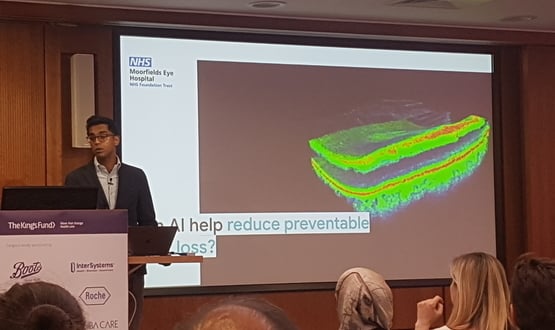

Speaking at The King’s Fund Digital Health and Care Congress, Dr Alan Karthikesalingam described how AI can transform the lives of over-stretched NHS clinicians.

He addressed the key machine learning projects Google DeepMind is working on, including its work at Moorfields Eye Hospital detecting age-related macular degeneration; working with Cancer Research UK to better detect breast cancer; and recently published research using machine learning to quickly identify lung cancers.

He later took part in a panel discussion on the “view from the front line” alongside representatives from mHabitat, South Central and West Commissioning Support Unit and Lancashire Care NHS Foundation Trust.

Dr Karthikesalingam was asked if, as commonly reported in tabloid media, machine learning and artificial intelligence would “deskill” clinicians because “the robots are coming”.

Referring to how the thermometer was seen as a threat to healthcare when it was first invented, Dr Karthikesalingam reassured the audience that clinicians need not worry about their jobs being taken.

“There’s been some fantastic articles comparing machine learning in healthcare to the advent of the thermometer, which was actually viewed as a tremendous threat to the medical profession and there was this huge divide between clinicians who felt the need to measure someone’s temperature and those who could do it intuitively,” he told the audience in London.

“Obviously there are well founded concerns about automation bias but I think the reality, at least in my experience, is that specialists have tremendous professional codes of conduct and excellent programmes for how skills are developed but also how they change over time.

“The idea, at least behind all of the work we are doing, is to produce tools that would not deskill people but would hopefully help them be at the top of their skills most of the time.”

Referring back to his time as a surgeon, Dr Karthikesalingam recalled countless occasions spent trying to connect to WiFi or sorting through “reams of paper” to find old surgical notes.

“A lot of this [machine learning] is going to be about how these systems are built and designed so they actually alleviate those pain points to enable more of the nurses’ and doctors’ time to be spent exercising the real specialist skills that they’ve trained so long to do,” he added.

“I would say that the potential risk is balanced by the potential benefits of machine learning and digital health when done well and when done with users at the heart.

“It does the opposite really, it upskills people and allows them to spend more of their day at the absolute peak of their professional abilities.”

Shauna McMahon, partner in digital transformation consulting for South Central and West Commissioning Support Unit, said that clinicians should expect that technology is going to change the provision of healthcare, but that it wasn’t necessarily a bad thing.

“Every other industry has fundamentally changed. I don’t think it’s fair to sit in healthcare and pretend that there will not be a fundamental shift in the skills that will be required,” she said.

“With AI probably soon you’ll be able to read slides and lab results and have computers be able to tell you what it is. Pathologists years ago were seeing that their roles were going to have to change and they would need to do differently.

“I expect there not to be a deskilling but a different skilling to work in the world of technology.”

Artificial intelligence has become a buzzword in healthcare in recent years. Health leaders, researchers and businesses are all looking into the benefits and risks of the technology.

In February, the Academy of Medical Royal Colleges published a report setting out seven key recommendations for politicians, policy makers and service providers to follow.

Among them was that such figures and organisations “should avoid thinking AI is going to solve all the problems the health and care systems across the UK are facing”.

The same report, commissioned by NHS Digital, warned that a line “needs to be drawn” to establish accountability between clinicians and AI, as clinicians may find themselves incorrectly trusting decisions made by the technology more than they trust their own.

1 Comment

Bring IT on, but I would add that the NHS needs “technical” doers just as much as it needs “clinical” doers working within the NHS, talking directly with each other. That is the best way to get the most out of IT, particularly DATA.
